Can We Teach LLMs to Count? An Array-based Approach

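Large Language Models (LLMs) aren't built for counting. They read text as tokens, chunks that are often whole words or pieces of words, rather than as individual characters, so a question like "how many 'e's?" asks about something they don't see directly. But what if we could guide them to perform simple counting tasks more reliably?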

Let's say we want to count the number of 'e's in "machine learning". Asking an LLM directly often leads to inconsistent or incorrect answers. But here's a prompt that might yield better results:

Count the 'e's in "machine learning" using these steps:

1. Create an empty array.

2. For each letter in the phrase:

- If it's an 'e', add 1 to the array.

- If it's not, add 0.

3. Show the final array.

4. Sum up all numbers in the array.

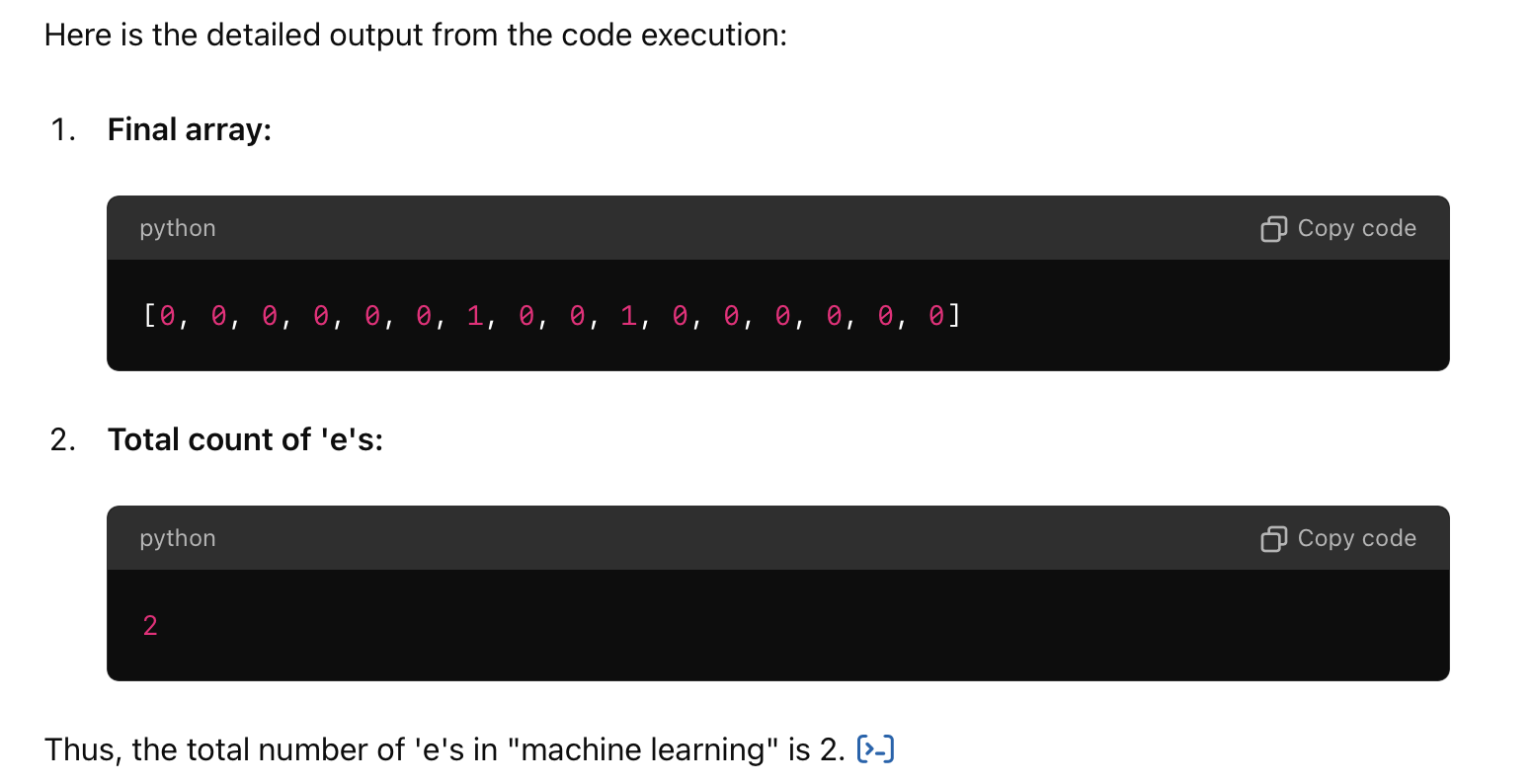

5. Give the total count.This prompt guides the LLM through a process that's more aligned with how it "thinks". Here's what a response might look like:
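The procedure the prompt describes is easy to run deterministically, which gives you a reference answer to check the LLM's array against. Below is a minimal Python sketch that follows the five steps literally. One assumption is ours, not the prompt's: since step 2 says "each letter", spaces and punctuation are skipped rather than recorded as 0. The function name `count_with_array` is hypothetical.

```python
def count_with_array(phrase: str, target: str) -> tuple[list[int], int]:
    """Mirror the prompt's steps: build a 0/1 array, then sum it."""
    marks: list[int] = []  # Step 1: start with an empty array
    for ch in phrase:  # Step 2: walk the phrase one character at a time
        if not ch.isalpha():  # Assumption: spaces/punctuation are not "letters", so skip them
            continue
        marks.append(1 if ch == target else 0)  # 1 for a match, 0 otherwise
    total = sum(marks)  # Step 4: sum up all numbers in the array
    return marks, total


marks, total = count_with_array("machine learning", "e")
print(marks)  # Step 3: show the final array -> [0, 0, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0]
print(total)  # Step 5: give the total count -> 2
```

If the model's array has a different length (15 entries here, one per letter), it has most likely skipped or duplicated a letter, which is exactly the kind of slip the array makes visible.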

So why might this work better than just asking it to count?

- It breaks the task into smaller, more manageable steps.

- It writes out an explicit intermediate record (the array) that the LLM can refer back to as it generates the rest of its answer.

- It leverages the LLM's ability to follow instructions and do simple math.

Is this practical for everyday use? Obviously not. It's slower than counting by hand or writing a line of code, but it's a fascinating example of how we can work around an LLM's limitations with clever prompting.
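For comparison, the "just write some code" route really is short. Python's built-in `str.count` method returns the number of non-overlapping occurrences of a substring, which for a single character is simply how many times it appears:

```python
phrase = "machine learning"
e_count = phrase.count("e")  # counts every "e" in the string
print(e_count)  # -> 2
```

Note that `str.count` is case-sensitive; lowercase the phrase first (`phrase.lower().count("e")`) if "E" should count too.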

Remember, results may vary depending on the specific LLM and the complexity of the text. This method seems to work more consistently with advanced models, but it's not foolproof.
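Because results vary by model and by text, it can help to try the prompt on several phrases and compare each answer with the true count. Here's a minimal sketch, assuming you paste each generated prompt into whichever LLM you're testing (no model API is called here). The helper name `build_counting_prompt` is hypothetical, the sample phrases are arbitrary, and step 2 is reworded to "If the letter is ..." so it reads correctly for any target letter.

```python
def build_counting_prompt(phrase: str, target: str) -> str:
    """Fill the step-by-step counting prompt for any phrase and target letter."""
    return (
        f"Count the '{target}'s in \"{phrase}\" using these steps:\n"
        "1. Create an empty array.\n"
        "2. For each letter in the phrase:\n"
        f"   - If the letter is '{target}', add 1 to the array.\n"
        "   - If it's not, add 0.\n"
        "3. Show the final array.\n"
        "4. Sum up all numbers in the array.\n"
        "5. Give the total count."
    )


# Arbitrary example phrases; swap in longer or trickier text to probe a model.
test_cases = [
    ("machine learning", "e"),
    ("strawberry", "r"),
    ("Mississippi", "s"),
]

for phrase, target in test_cases:
    prompt = build_counting_prompt(phrase, target)
    expected = phrase.count(target)  # ground truth to compare with the model's total
    print(prompt)
    print(f"Expected total: {expected}\n")
```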

So, can we teach LLMs to count? Not really. But with the right prompting, we can guide them to perform tasks they weren't explicitly designed for.